Outside office hours, we are also notified of critical alerts by SMS through a GSM modem.

Using default SCOM functionality, we delay the sending of notifications by 5 minutes. This works fine for alerts in the “new” state.

However, if an alert is closed within that 5-minute period, a “closed” notification is still sent out.

We do not want to receive closed notifications for alerts that auto-resolved within the 5-minute period. But if a new alert has aged 5 minutes and been sent to our GSM modem, we definitely want to receive the closed notification when it is resolved, automatically or manually (to make sure someone actually did something about the alert).

Using default SCOM functionality, this is not possible. This is why we came up with the following idea (special thanks to my colleague Frank):

- Use two separate subscriptions, one for “new” alerts and one for “closed” alerts.

- On the new-alert subscription (the one with the 5-minute delay), add a command channel that runs a PowerShell script to update custom field 1 once an SMS has been sent.

- On the closed-alert subscription, add a condition that checks custom field 1 to see whether an SMS has been sent.
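To make the intended behaviour concrete, here is a small PowerShell model of the two-subscription idea. It does not talk to SCOM at all: the function name `Get-SmsNotification` and its parameters are hypothetical, and it assumes the 5-minute delay only lets the “new” notification through if the alert is still open once the delay has elapsed.

```powershell
# Hypothetical model of the two-subscription scheme; it does not call SCOM.
# Returns the SMS notifications that a single alert would trigger.
function Get-SmsNotification {
    param(
        # Minutes between the alert being raised and being closed; $null if it is still open.
        [Nullable[double]]$ClosedAfterMinutes = $null,
        # Delay configured on the channel of the "new" alert subscription.
        [double]$DelayMinutes = 5
    )

    $notifications = @()
    $customField1 = ""

    # "New" subscription: only fires if the alert is still open once the delay has elapsed.
    $openAfterDelay = ($null -eq $ClosedAfterMinutes) -or ($ClosedAfterMinutes -gt $DelayMinutes)
    if ($openAfterDelay) {
        $notifications += "new"
        # The command channel runs UpdateAlertCustomField.ps1, which stamps custom field 1.
        $customField1 = "Notification sent out"
    }

    # "Closed" subscription: only fires for closed alerts whose custom field 1 was stamped.
    if (($null -ne $ClosedAfterMinutes) -and ($customField1 -eq "Notification sent out")) {
        $notifications += "closed"
    }

    $notifications
}
```

For example, `Get-SmsNotification -ClosedAfterMinutes 2` returns nothing (the alert auto-resolved within the delay), while `Get-SmsNotification -ClosedAfterMinutes 30` returns both “new” and “closed”. Custom field 1 acts as the memory that links the two otherwise independent subscriptions.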

1. The Command Notification Channel

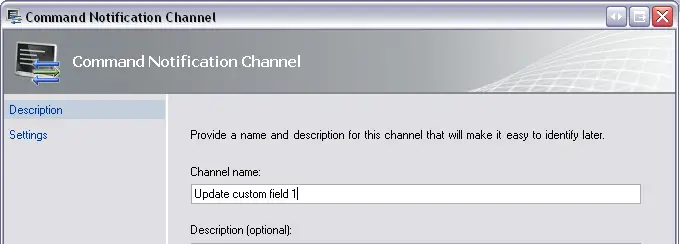

First we have to create a “Command Notification Channel”. Go to the “Administration” section of the SCOM management console. Click on Notifications->Channels.

Right-click and select “New->Command…”.

The following wizard appears:

Give the channel a name and click “Next >”.

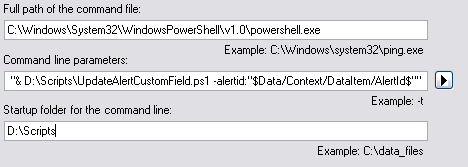

Enter the following settings for the channel:

Full path of the command file:

C:\Windows\System32\WindowsPowerShell\v1.0\powershell.exe

Command line parameters:

-Command "& D:\Scripts\UpdateAlertCustomField.ps1 -alertid:"$Data/Context/DataItem/AlertId$""

Startup folder for the command line:

D:\Scripts

Change D:\Scripts to reflect your PowerShell script location. It should now look like this:

Save the changes by clicking “Finish”.
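Before wiring the channel into a subscription, it can be worth running the same command by hand against an existing alert, so that script errors show up in a console instead of disappearing inside the notification pipeline. This is a minimal sketch: the alert ID below is a placeholder that must be replaced with the ID of a real alert, and the paths assume the D:\Scripts location used above.

```powershell
# Placeholder: replace with the ID of a real alert in your management group.
$alertId = "00000000-0000-0000-0000-000000000000"

# Same executable and script as the command channel; in the channel itself,
# SCOM substitutes $Data/Context/DataItem/AlertId$ with the alert ID.
& "C:\Windows\System32\WindowsPowerShell\v1.0\powershell.exe" -Command "& D:\Scripts\UpdateAlertCustomField.ps1 -alertid:'$alertId'"
```

Afterwards, custom field 1 of that alert should read “Notification sent out” in the Operations console.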

2. The PowerShell script

To modify alert “custom field 1”, I use a small PowerShell script. The text written into the field is “Notification sent out”.

The script is shown below. Save it as “UpdateAlertCustomField.ps1” in the directory specified in the command notification channel above.

```powershell
# Get the alert ID passed in by the command notification channel
Param($alertid)
$alertid = $alertid.ToString()

# Load the SCOM PowerShell snap-in
Add-PSSnapin "Microsoft.EnterpriseManagement.OperationsManager.Client"
$server = "localhost"

# Connect to the SCOM management group and switch to the monitoring provider
New-ManagementGroupConnection -ConnectionString:$server
Set-Location "OperationsManagerMonitoring::"

# Update custom field 1 of the alert and save it with a history comment
$alert = Get-Alert -Id $alertid
$alert.CustomField1 = "Notification sent out"
$alert.Update("Custom field 1 updated by UpdateAlertCustomField script")
```

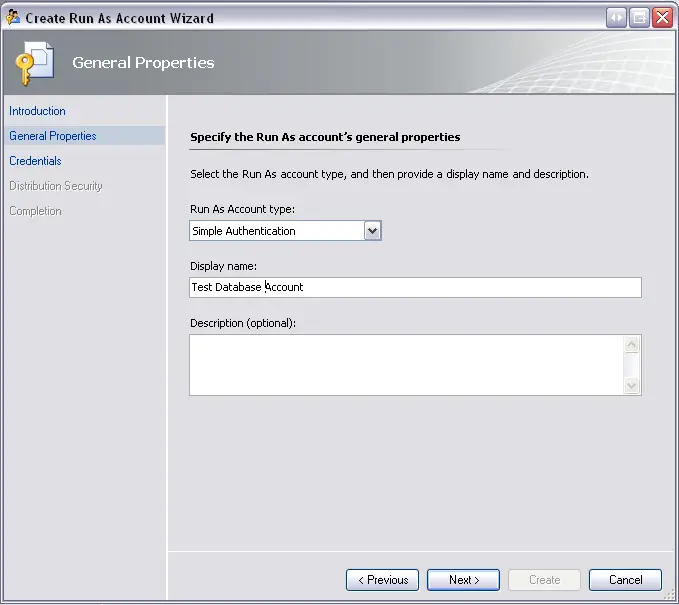

3. The command subscriber

The next step is to create a subscriber that has the command notification channel created above assigned as its channel.

Go to the “Administration” section of the SCOM management console. Click on Notifications->Subscribers.

Right-click and select “New…”.

In the “Notification Subscriber Wizard” give the new subscriber a name. In the next step of the wizard, specify your schedule as desired.

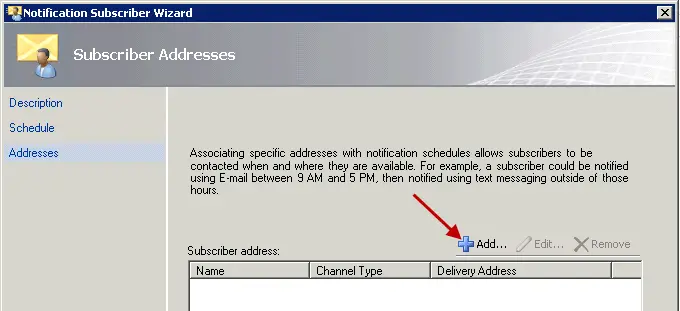

On the “Addresses” step, click “Add…” to add a new address.

In the “Subscriber Address” wizard, specify a name for the new address. This can be virtually anything, as no e-mails, pages or SMS messages are sent to it anyway.

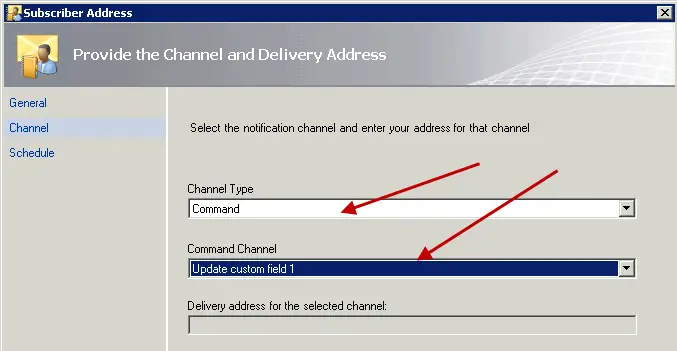

Next, specify the “Command” channel type and select the Command channel we created earlier (Update custom field 1).

Specify your schedule as desired, click “Finish” to end the wizard. Click “Finish” again to close the “Notification Subscriber Wizard”.

You should now have a subscriber with the command channel as its assigned channel.

4. The subscription for new alerts

Now that the command notification channel, PowerShell script and subscriber are ready, we can create a new subscription for new alerts.

Go to the “Administration” section of the SCOM management console. Click on Notifications->Subscriptions.

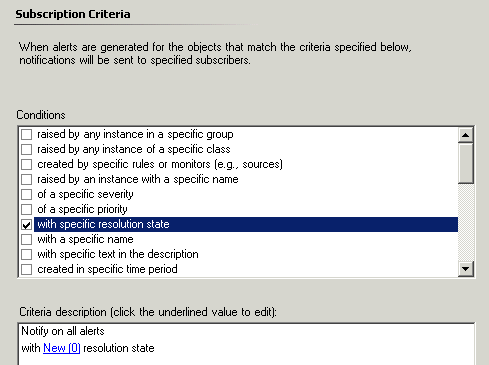

In the “Notification Subscription Wizard”, specify a name for the new subscription. The next step of the wizard defines the criteria for the subscription.

Specify at least the “with specific resolution state” criterion (the “new” state); of course, you can add your own criteria here as you normally would.

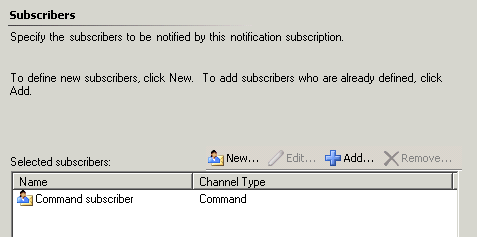

On the next wizard page (Subscribers) add the command subscriber we created in step 3, as shown below.

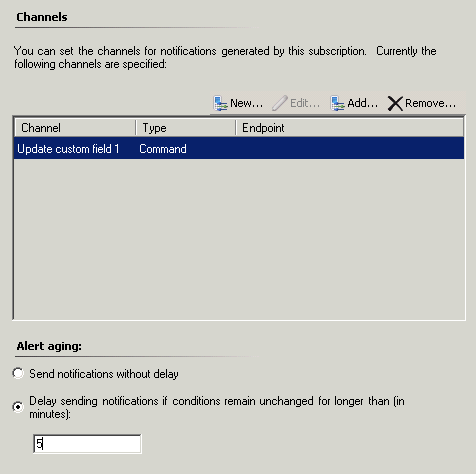

In the next wizard step (Channels), add the command channel we created in step 1 and specify the desired delay (5 minutes in this case), as shown below.

Click “Next”. In the summary step, make sure “Enable this notification subscription” is checked and click “Finish”.

You should now have a subscription ready for new SCOM alerts.

5. Subscription for closed alerts

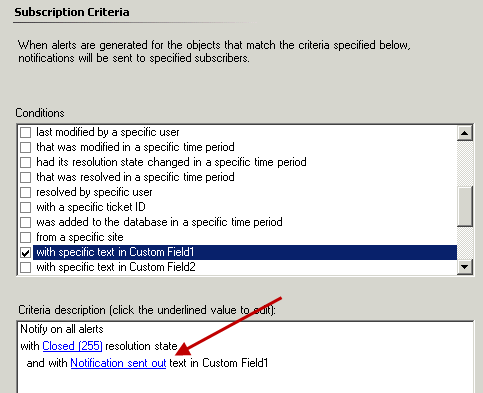

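You can create this subscription as you normally would. The only important part is getting the criteria right: besides the “closed” resolution state, they have to include custom field 1, so that the subscription only matches alerts for which the script has written “Notification sent out”.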

This is how the closed subscription criteria look:

NOTE: there is currently a bug in SCOM R2 when using custom fields in a subscription criteria!

For more information about this bug, visit the following URL:

http://social.technet.microsoft.com/Forums/en/operationsmanagergeneral/thread/260be16a-0f45-4904-8093-7c1caa5ed546

Note that you have to update the XML file each time you change something in either of the notification subscriptions!

Maarten Damen